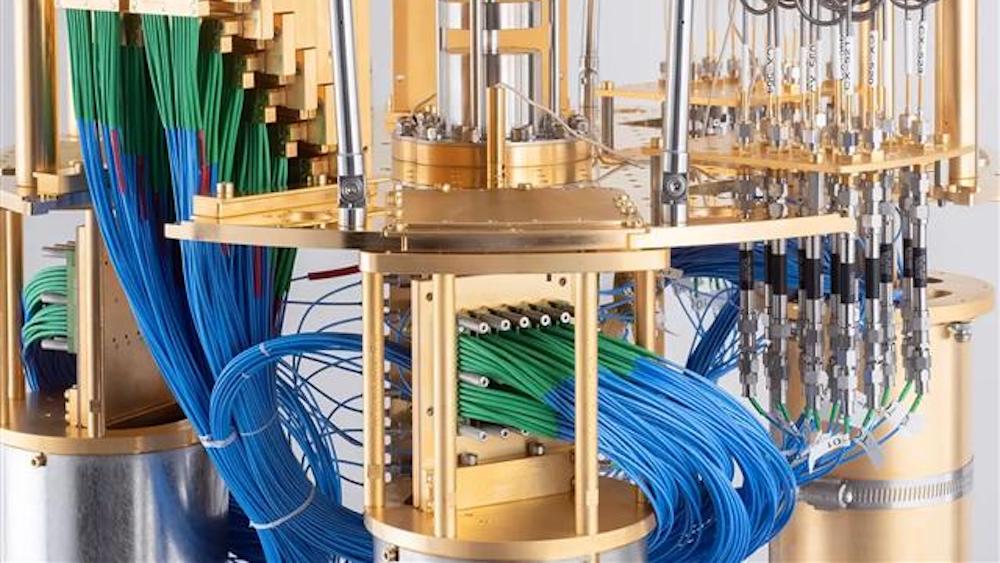

Photo IBM

Usually, we talk about error correction as a distant advancement, one that won’t be useful until we find the right techniques and have built good enough hardware. Indeed, many experts predict that practical fault tolerant quantum computing (FTQC) will require millions of physical quantum bits (qubits) — a number we believe is too large to be practical at this stage of development.

But now, IBM scientists published the discovery of new codes that work with ten times fewer qubits. Practical error correction is far from a solved problem. However, these new codes and other advances across the field are increasing our confidence that fault tolerant quantum computing isn’t just possible, but is possible without having to build an unreasonably large quantum computer.

Correcting errors using codes

Error correction isn’t a new concept, but quantum error correction (QEC) requires a little more thought. Standard classical error correction only needs to correct bit flip errors, where bits accidentally switch from 0 to 1 or vice versa. Quantum computers must correct more kinds of errors, like phase errors which can corrupt the extra quantum information that qubits carry. Not only that, but QEC techniques must correct errors without the ability to copy unknown quantum states, and without destroying the underlying quantum state.

These codes work by encoding logical qubit information across a larger number of physical qubits. A practical error correcting code comes with a few constraints. A QEC code should require only qubit interactions that we can build in practice and at scale. For example, an all-to-all connectivity, or every qubit connected to every other qubit, is not considered practical for large systems.

We also require a code that works on relatively noisy qubits — many scientists believe it unlikely that physical error rates will be reduced much below the ~.0001 scale, or approximately a one-in-ten thousand error rate. Also, the code must supply low enough logical qubit error rates that we can perform complex calculations with our system.

A rough estimate is that the desired logical error rate be below the inverse of the total number of logical operations (i.e. if an algorithm requires 1 million logical operations one should aim for at least a 1e-6 error rate for logical qubits or better). Finally the code must have an acceptable overhead — additional physical qubits increase the cost and complexity of the computer, and if too extreme, they may make the QEC code impractical.

There are many QEC codes under development, representing the different ways you can encode quantum information over physical qubits to protect the information from errors. Some of the most promising codes today are called quantum low-density parity check, or LDPC codes. These codes satisfy many of the practical constraints above, in particular the fact that each qubit is connected to only a few others and errors can be detected using simple circuits without spreading them to too many others.

The surface code is an example of an LDPC code which encodes information into a two-dimensional grid of qubits. Surface codes have been thoroughly studied and have been the up-front approach for many envisioned QEC architectures. Teams of researchers have already been able to demonstrate small examples of surface codes; including related work by IBM.

But for now, surface codes have drawbacks that make them unlikely to be viable for constructing a useful quantum computer: they require too many physical qubits, possibly 20 million qubits for problems of interest. Hence, at IBM we continue to hunt for QEC codes which reduce this overhead. And we’ve found promise in LDPC codes beyond the surface code.

New codes for efficient error correction

IBM scientists have now discovered LDPC codes with a few hundred physical qubits that feature a more than ten-fold reduction of the number of physical qubits relative to the surface code. This is possible because the code encodes more information into the same number of physical qubits, while still showing excellent performance at error rates below 0.001. Furthermore, each qubit is coupled to six others, a reasonable hardware requirement for realizing the error correction protocol.

The surface code uses a 2D lattice of qubits, connected like the edges of the squares on a checkerboard. To reach more efficient codes, like this one, scientists need to break the plane — including edges that curve above the checkerboard and connect distant qubits. At IBM, we are developing such non-local “c” couplers that can create these extra edges.

These hardware requirements, however, are not yet met by present-day superconducting quantum computing processors. The six-way connectivity and need for long wires is more challenging than what we have built at IBM so far. This is the main technological challenge, but not an insurmountable one. We are developing higher degrees of connectivity as well as non-local “c” couplers that act like long wires between distant qubits.

Our no-nonsense path toward quantum advantage

We are researching a continuous path to quantum advantage. Today, error mitigation and suppression techniques are bringing our clients to the edge of quantum advantage. These techniques are a stepping stone towards a world of fault-tolerant computing, and this new LDPC code is bringing that world closer. It’s a promising result pointing us where we should look next for even better error correcting codes.

We’re looking to advance error correction in all directions. Can we find LDPC codes with even better encoding rates and the ability to perform fast error-corrected quantum circuits? This would significantly reduce the cost of error correction and increase our confidence to construct a practical universal quantum computer.

But now, IBM scientists published the discovery of new codes that work with ten times fewer qubits. Practical error correction is far from a solved problem. However, these new codes and other advances across the field are increasing our confidence that fault tolerant quantum computing isn’t just possible, but is possible without having to build an unreasonably large quantum computer.

Correcting errors using codes

Error correction isn’t a new concept, but quantum error correction (QEC) requires a little more thought. Standard classical error correction only needs to correct bit flip errors, where bits accidentally switch from 0 to 1 or vice versa. Quantum computers must correct more kinds of errors, like phase errors which can corrupt the extra quantum information that qubits carry. Not only that, but QEC techniques must correct errors without the ability to copy unknown quantum states, and without destroying the underlying quantum state.

These codes work by encoding logical qubit information across a larger number of physical qubits. A practical error correcting code comes with a few constraints. A QEC code should require only qubit interactions that we can build in practice and at scale. For example, an all-to-all connectivity, or every qubit connected to every other qubit, is not considered practical for large systems.

We also require a code that works on relatively noisy qubits — many scientists believe it unlikely that physical error rates will be reduced much below the ~.0001 scale, or approximately a one-in-ten thousand error rate. Also, the code must supply low enough logical qubit error rates that we can perform complex calculations with our system.

A rough estimate is that the desired logical error rate be below the inverse of the total number of logical operations (i.e. if an algorithm requires 1 million logical operations one should aim for at least a 1e-6 error rate for logical qubits or better). Finally the code must have an acceptable overhead — additional physical qubits increase the cost and complexity of the computer, and if too extreme, they may make the QEC code impractical.

There are many QEC codes under development, representing the different ways you can encode quantum information over physical qubits to protect the information from errors. Some of the most promising codes today are called quantum low-density parity check, or LDPC codes. These codes satisfy many of the practical constraints above, in particular the fact that each qubit is connected to only a few others and errors can be detected using simple circuits without spreading them to too many others.

The surface code is an example of an LDPC code which encodes information into a two-dimensional grid of qubits. Surface codes have been thoroughly studied and have been the up-front approach for many envisioned QEC architectures. Teams of researchers have already been able to demonstrate small examples of surface codes; including related work by IBM.

But for now, surface codes have drawbacks that make them unlikely to be viable for constructing a useful quantum computer: they require too many physical qubits, possibly 20 million qubits for problems of interest. Hence, at IBM we continue to hunt for QEC codes which reduce this overhead. And we’ve found promise in LDPC codes beyond the surface code.

New codes for efficient error correction

IBM scientists have now discovered LDPC codes with a few hundred physical qubits that feature a more than ten-fold reduction of the number of physical qubits relative to the surface code. This is possible because the code encodes more information into the same number of physical qubits, while still showing excellent performance at error rates below 0.001. Furthermore, each qubit is coupled to six others, a reasonable hardware requirement for realizing the error correction protocol.

The surface code uses a 2D lattice of qubits, connected like the edges of the squares on a checkerboard. To reach more efficient codes, like this one, scientists need to break the plane — including edges that curve above the checkerboard and connect distant qubits. At IBM, we are developing such non-local “c” couplers that can create these extra edges.

These hardware requirements, however, are not yet met by present-day superconducting quantum computing processors. The six-way connectivity and need for long wires is more challenging than what we have built at IBM so far. This is the main technological challenge, but not an insurmountable one. We are developing higher degrees of connectivity as well as non-local “c” couplers that act like long wires between distant qubits.

Our no-nonsense path toward quantum advantage

We are researching a continuous path to quantum advantage. Today, error mitigation and suppression techniques are bringing our clients to the edge of quantum advantage. These techniques are a stepping stone towards a world of fault-tolerant computing, and this new LDPC code is bringing that world closer. It’s a promising result pointing us where we should look next for even better error correcting codes.

We’re looking to advance error correction in all directions. Can we find LDPC codes with even better encoding rates and the ability to perform fast error-corrected quantum circuits? This would significantly reduce the cost of error correction and increase our confidence to construct a practical universal quantum computer.

IonQ Achieves Industry Leading Performance on Next Generation Barium Qubits

IonQ Achieves Industry Leading Performance on Next Generation Barium Qubits