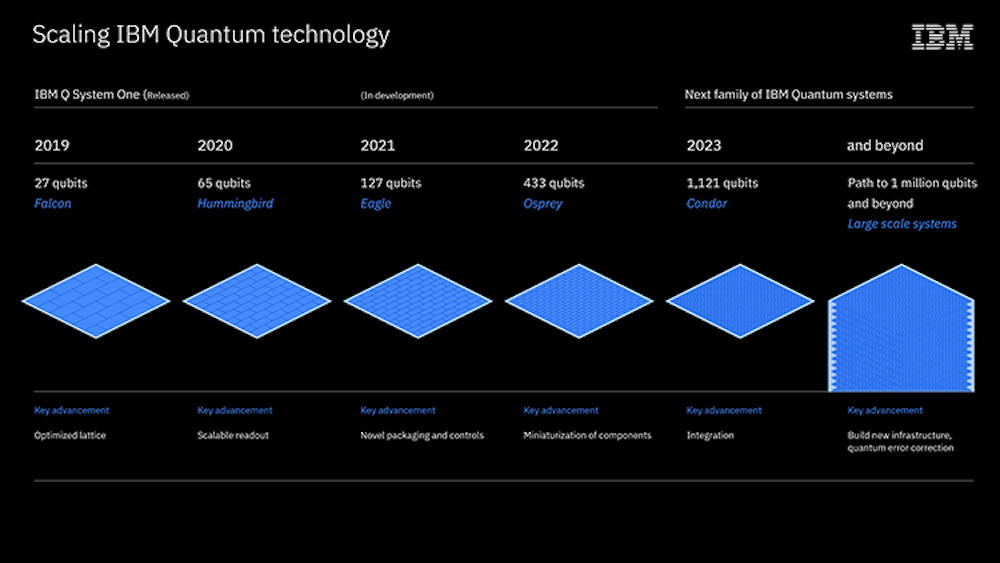

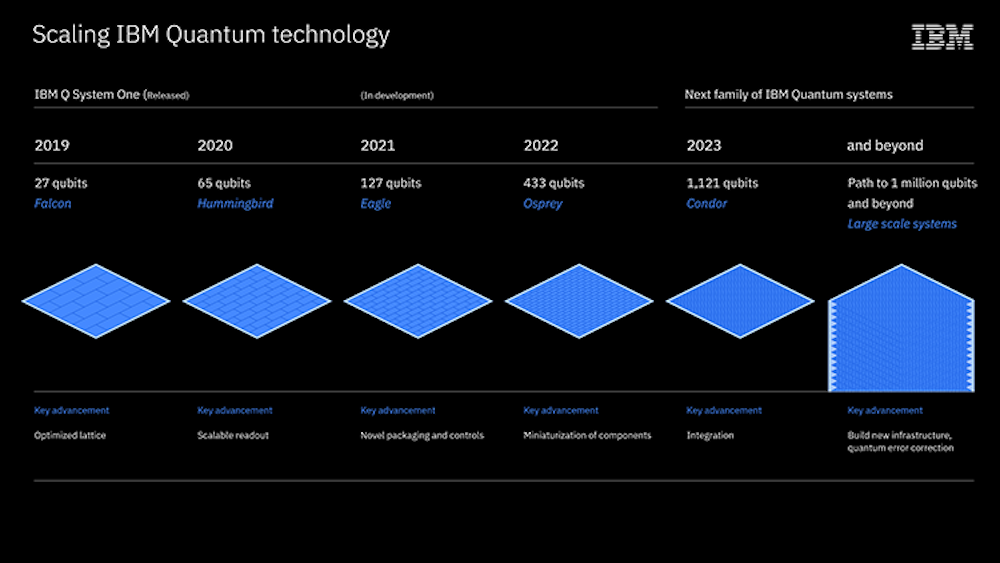

Today, we are releasing the roadmap that we think will take us from the noisy, small-scale devices of today to the million-plus qubit devices of the future. Our team is developing a suite of scalable, progressively larger and better processors, with a 1,000-plus qubit device, called IBM Quantum Condor, targeted for the end of 2023. To house even more massive devices beyond Condor, we’re developing a dilution refrigerator larger than any currently available commercially. This roadmap puts us on a course toward the future’s million-plus qubit processors thanks to industry-leading knowledge, multidisciplinary teams, and agile methodology improving every element of these systems. All the while, our hardware roadmap sits at the heart of a larger mission: to design a full-stack quantum computer deployed via the cloud that anyone around the world can program.

Members of the IBM Quantum team at work investigating how to control increasingly large systems of qubits for long enough, and with few enough errors, to run the complex calculations required by future quantum applications. Credit: Connie Zhou for IBM

The IBM Quantum team builds quantum processors—computer processors that rely on the mathematics of elementary particles in order to expand our computational capabilities, running quantum circuits rather than the logic circuits of digital computers. We represent data using the electronic quantum states of artificial atoms known as superconducting transmon qubits, which are connected and manipulated by sequences of microwave pulses in order to run these circuits. But qubits quickly forget their quantum states due to interaction with the outside world. The biggest challenge facing our team today is figuring out how to control large systems of these qubits for long enough, and with few enough errors, to run the complex quantum circuits required by future quantum applications.

IBM has been exploring superconducting qubits since the mid-2000s, increasing coherence times and decreasing errors to enable multi-qubit devices in the early 2010s. Continued refinements and advances at every level of the system from the qubits to the compiler allowed us to put the first quantum computer in the cloud in 2016. We are proud of our work. Today, we maintain more than two dozen stable systems on the IBM Cloud for our clients and the general public to experiment on, including our 5-qubit IBM Quantum Canary processors and our 27-qubit IBM Quantum Falcon processors—on one of which we recently ran a long enough quantum circuit to declare a Quantum Volume of 64. This achievement wasn’t a matter of building more qubits; instead, we incorporated improvements to the compiler, refined the calibration of the two-qubit gates, and issued upgrades to the noise handling and readout based on tweaks to the microwave pulses. Underlying all of that is hardware with world-leading device metrics fabricated with unique processes to allow for reliable yield.
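To put the Quantum Volume figure in context: the metric is conventionally reported as 2^n, where n is the width and depth of the largest square model circuits a processor runs successfully. The short sketch below only illustrates that relationship; the function name `qv_circuit_size` is a hypothetical helper, not part of any IBM tool.

```python
def qv_circuit_size(quantum_volume: int) -> int:
    """Return n such that quantum_volume == 2**n.

    Assumes the usual convention that Quantum Volume is a power of two,
    with n the width (qubits) and depth (layers) of the square test circuits.
    """
    # A power of two has exactly one bit set.
    if quantum_volume < 1 or quantum_volume & (quantum_volume - 1):
        raise ValueError("Quantum Volume is expected to be a power of two")
    return quantum_volume.bit_length() - 1


# A Quantum Volume of 64 corresponds to square circuits on 6 qubits, 6 layers deep.
print(qv_circuit_size(64))
```

Under this convention, each doubling of Quantum Volume means one more qubit and one more layer in the test circuits, which is why the figure can improve without adding qubits to the chip.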

Alongside our efforts to improve our smaller devices, we are also incorporating the many lessons learned into an aggressive roadmap for scaling to larger systems. In fact, this month we quietly released our 65-qubit IBM Quantum Hummingbird processor to our IBM Q Network members. This device features 8:1 readout multiplexing, meaning we combine readout signals from eight qubits into one, reducing the total amount of wiring and components required for readout and improving our ability to scale, while preserving all of the high-performance features from the Falcon generation of processors. We have significantly reduced the signal processing latency in the associated control system in preparation for upcoming feedback and feed-forward system capabilities, where we’ll be able to control qubits based on classical conditions while the quantum circuit runs.
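As a rough illustration of why 8:1 multiplexing helps with scaling, the sketch below counts the readout lines needed with and without it. It assumes qubits are simply grouped eight at a time, with any remainder taking one extra line; the actual grouping on the Hummingbird chip is not described here, and `readout_lines` is a hypothetical helper.

```python
import math


def readout_lines(num_qubits: int, mux_ratio: int = 8) -> int:
    """Number of readout lines when up to `mux_ratio` qubits share one line.

    Assumption: groups are filled greedily, so a partial group still
    needs its own line (hence the ceiling).
    """
    if num_qubits < 0 or mux_ratio < 1:
        raise ValueError("num_qubits must be >= 0 and mux_ratio >= 1")
    return math.ceil(num_qubits / mux_ratio)


qubits = 65  # Hummingbird
print("without multiplexing:", readout_lines(qubits, mux_ratio=1))
print("with 8:1 multiplexing:", readout_lines(qubits, mux_ratio=8))
```

Under this simple model, a 65-qubit device needs 9 readout lines instead of 65, which is the kind of reduction in wiring and components the paragraph above refers to.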

Next year, we’ll debut our 127-qubit IBM Quantum Eagle processor. Eagle features several upgrades in order to surpass the 100-qubit milestone: crucially, through-silicon vias (TSVs) and multi-level wiring make it possible to fan out a high density of classical control signals while protecting the qubits in a separate layer to maintain high coherence times. Meanwhile, we’ve struck a delicate balance between connectivity and reduced crosstalk error with our fixed-frequency approach to two-qubit gates and the hexagonal qubit arrangement introduced by Falcon. This qubit layout will allow us to implement the “heavy-hexagonal” error-correcting code that our team debuted last year, so as we scale up the number of physical qubits, we will also be able to explore how they’ll work together as error-corrected logical qubits. Every processor we design has fault tolerance considerations taken into account.

With the Eagle processor, we will also introduce concurrent real-time classical compute capabilities that will allow for execution of a broader family of quantum circuits and codes.

The design principles established for our smaller processors will set us on a course to release a 433-qubit IBM Quantum Osprey system in 2022. More efficient and denser controls and cryogenic infrastructure will ensure that scaling up our processors doesn’t sacrifice the performance of our individual qubits, introduce further sources of noise, or take up too large a footprint.

In 2023, we will debut the 1,121-qubit IBM Quantum Condor processor, incorporating the lessons learned from previous processors while continuing to lower the critical two-qubit errors so that we can run longer quantum circuits. We think of Condor as an inflection point: a milestone that marks our ability to implement error correction and scale up our devices, while also being complex enough to explore potential Quantum Advantages, problems that we can solve more efficiently on a quantum computer than on the world’s best supercomputers.

The development required to build Condor will have solved some of the most pressing challenges in the way of scaling up a quantum computer. However, as we explore realms even further beyond the thousand qubit mark, today’s commercial dilution refrigerators will no longer be capable of effectively cooling and isolating such potentially large, complex devices.

That’s why we’re also introducing a 10-foot-tall and 6-foot-wide “super-fridge,” internally codenamed “Goldeneye,” a dilution refrigerator larger than any commercially available today. Our team has designed this behemoth with a million-qubit system in mind—and has already begun fundamental feasibility tests. Ultimately, we envision a future where quantum interconnects link dilution refrigerators each holding a million qubits like the intranet links supercomputing processors, creating a massively parallel quantum computer capable of changing the world.

Knowing the way forward doesn’t remove the obstacles; we face some of the biggest challenges in the history of technological progress. But, with our clear vision, a fault-tolerant quantum computer now feels like an achievable goal within the coming decade.

A look at IBM’s roadmap to advance quantum computers from today’s noisy, small-scale devices to the larger, more advanced quantum systems of the future. Credit: StoryTK for IBM
